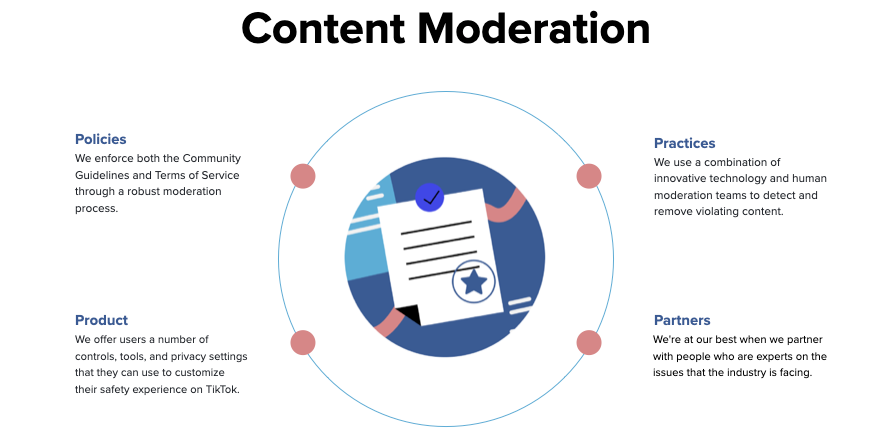

In March, TikTok revealed its plans to open Transparency and Accountability Centers in Los Angeles and Washington, DC. Because of the coronavirus pandemic, physical visits have been put on hold for now. TikTok has instead launched a virtual Transparency Center and invited select experts on virtual tours, where they can learn about and ask questions about the platform's safety and security practices and gain insight into how the platform works.

A key part of the Transparency and Accountability Center focuses on how TikTok's moderators evaluate content and accounts that have been escalated by machine learning systems or by user reports. Visitors get an in-depth look at TikTok's safety classifiers and the deep learning models that identify harmful content, as well as at how the engine ranks content to support moderation teams in their evaluations.

Vice president and head of US public policy Michael Beckerman said in a blog post that once the physical centers open to visitors, guests will be able to use TikTok's moderation platform, review and label sample content, experiment with detection models, and sit in the seat of a content moderator.

TikTok will also share details on how its recommendation engine works and how user experience and safety are built into those recommendations. “This includes sharing how our systems work to diversify content in the For You feed and additional insights into how content is tailored to the preferences of each user,” said Beckerman.

Finally, Beckerman wrote: “Under the leadership of our Chief Security Officer, Roland Cloutier – who has decades of experience in law enforcement and the financial services industry – our teams work to protect our community’s information and stay ahead of evolving security challenges.”

TikTok says its virtual experience shows visitors how it stores TikTok user data in the US and how it safeguards that data from hackers and other threats using encryption and other security technology. It also details how the company works with industry-leading third-party experts to test and validate its security processes.